Analysis of A/B Testing for New Feature in Microsoft Viva Engage

Key Learnings:

1. Writing SQL queries for data analysis

2. Analyzing and interpreting A/B test results

Project Overview:

This project analyzes an A/B test of a new feature in Microsoft Viva Engage, a social network for workplace communication. The primary goal is to validate the test results by analyzing the underlying data, so that the outcome is understood and interpreted correctly.

Dataset and Tools

This case study uses a SQL dataset structured like actual Microsoft Viva Engage data. All of the data analysis is done in PostgreSQL.

Observation

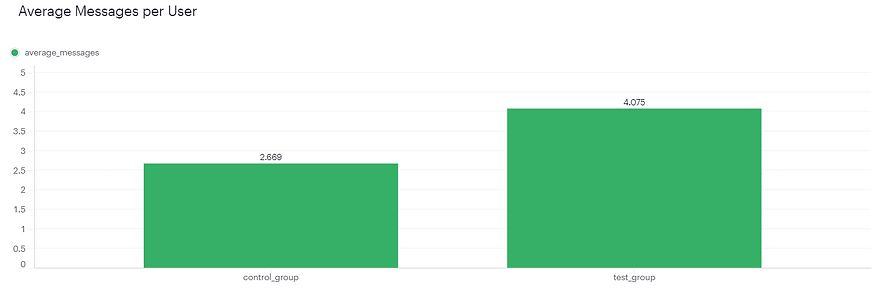

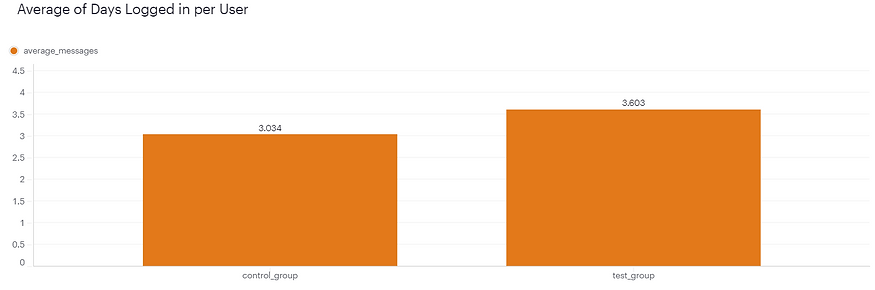

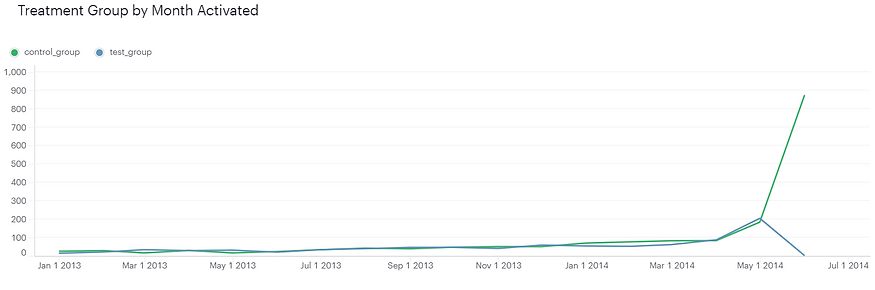

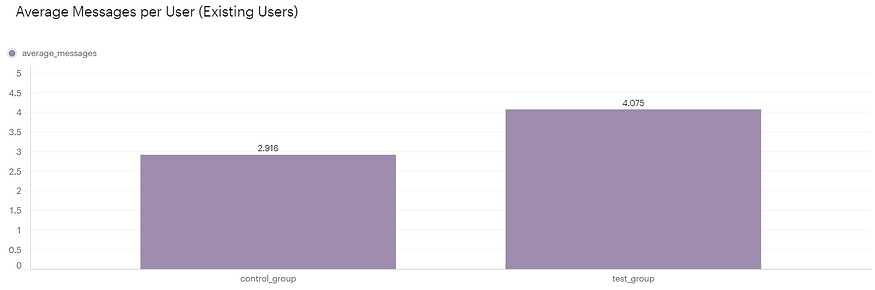

Microsoft Viva Engage introduced a new feature: an improved version of the module where users compose their messages. The feature was evaluated with a month-long A/B test. Some users saw the old version of the module (the “control group”) and others saw the new version (the “treatment group”). As visualized above, after a month the treatment group showed a 50% increase in message posting over the control group. This is a large increase, but further analysis is needed to determine whether the new feature actually caused it or whether other factors are at play.
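The 50% figure is a relative lift: the difference in average messages per user between the two groups, divided by the control average. The sketch below shows that calculation in Python. It uses toy per-user counts, not the case-study data. It also computes Welch's t-statistic as one common way to check whether a difference is more than noise. This test is an illustrative choice, not necessarily the one the original analysis used. The p-value comes from a normal approximation, which is only reasonable for large samples.

```python
import math
from statistics import NormalDist, mean, variance


def compare_groups(control, treatment):
    """Return (lift, t_stat, p_approx) for two lists of per-user metric values."""
    m_c, m_t = mean(control), mean(treatment)
    lift = (m_t - m_c) / m_c  # relative change versus the control mean
    # Welch's t-statistic: does not assume the two groups share a variance
    se = math.sqrt(variance(control) / len(control) + variance(treatment) / len(treatment))
    t_stat = (m_t - m_c) / se
    # Two-sided p-value from the normal approximation (large-sample assumption)
    p_approx = 2 * (1 - NormalDist().cdf(abs(t_stat)))
    return lift, t_stat, p_approx


# Hypothetical messages posted per user during the test (toy numbers)
control = [2, 3, 1, 4, 0, 2]
treatment = [3, 4, 2, 5, 1, 3]
lift, t_stat, p = compare_groups(control, treatment)
print(f"lift={lift:.0%}, t={t_stat:.2f}, p~{p:.3f}")
```

With samples this small, a large lift can still be statistically inconclusive. That is why the lift alone is not enough to validate the result.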

Implementation Plan

- Check additional metrics: It's essential to examine other metrics besides average messages to ensure that the observed increase is not limited to just one aspect. Identifying and analyzing additional important metrics will provide a more comprehensive understanding of the impact of the new feature.

- Investigate user treatment: A thorough review of the assignment of users to test and control groups is necessary. Any inaccuracies or discrepancies in the data need to be identified and addressed to ensure the validity of the results.

- Recommendations: Based on the conclusions drawn from the analysis, final recommendations will be made. These recommendations may include whether to roll out the new feature to all users, conduct further testing with adjustments, or consider abandoning the feature altogether.

Additional Metrics

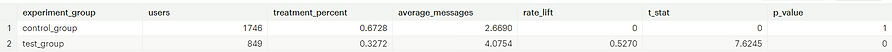

To evaluate the new feature in Viva Engage properly, it was important to look beyond message posting rates. The analysis also needed metrics that reflect user engagement and the value users get from the platform. The analysis shows a notable increase in the average number of logins per user in the treatment group. This implies a rise not only in message activity but also in overall engagement with Viva Engage (formerly Yammer).
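The sketch below expresses the logins-per-user comparison in SQL. It runs the query through Python's built-in `sqlite3` module so the example is self-contained; the project itself used PostgreSQL, and the query sticks to standard SQL. The tables `experiments` and `events`, their columns, and all rows are hypothetical stand-ins for the case-study schema. The `LEFT JOIN` keeps users who never logged in, so they still count in each group's denominator.

```python
import sqlite3

# SQLite stands in for PostgreSQL so the sketch runs anywhere.
# Table names, columns, and rows are hypothetical toy data.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE experiments (user_id INTEGER, experiment_group TEXT);
CREATE TABLE events (user_id INTEGER, event_name TEXT, occurred_at TEXT);
INSERT INTO experiments VALUES
  (1, 'control'), (2, 'control'), (5, 'control'),
  (3, 'treatment'), (4, 'treatment');
INSERT INTO events VALUES
  (1, 'login', '2024-06-03 09:00'), (1, 'login', '2024-06-04 09:00'),
  (2, 'login', '2024-06-03 10:00'),
  (3, 'login', '2024-06-03 08:00'), (3, 'login', '2024-06-04 08:00'),
  (3, 'login', '2024-06-05 08:00'),
  (4, 'login', '2024-06-03 12:00'), (4, 'login', '2024-06-06 12:00'),
  (3, 'send_message', '2024-06-03 08:05');
""")

# Login events per user, averaged within each experiment group.
# COUNT(ev.user_id) skips the NULL rows produced for users with no logins
# (user 5 here), while COUNT(DISTINCT e.user_id) still counts those users.
AVG_LOGINS_SQL = """
SELECT e.experiment_group,
       COUNT(ev.user_id) * 1.0 / COUNT(DISTINCT e.user_id) AS avg_logins
FROM experiments e
LEFT JOIN events ev
  ON ev.user_id = e.user_id AND ev.event_name = 'login'
GROUP BY e.experiment_group
"""

avg_logins = dict(conn.execute(AVG_LOGINS_SQL).fetchall())
print(avg_logins)
```

Multiplying by `1.0` avoids integer division, which both SQLite and PostgreSQL would otherwise apply to two integer counts.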

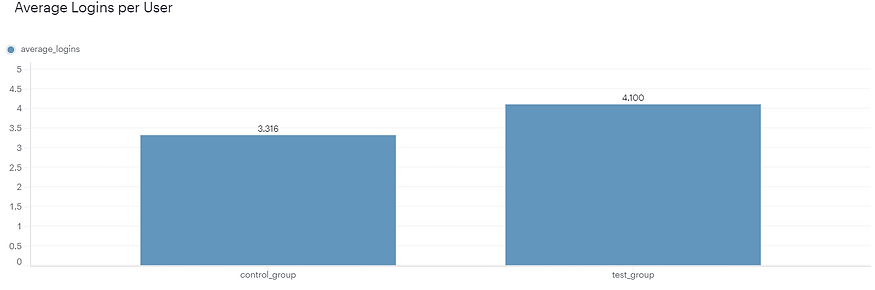

The analysis also revealed an increase in the number of distinct days on which users logged in, indicating more frequent and sustained usage. Taken at face value, these metrics suggest that the new feature positively influenced user behavior across several dimensions.
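Counting distinct login days follows the same pattern. This sketch uses the same hypothetical schema, with SQLite standing in for PostgreSQL. `DATE(occurred_at)` truncates each timestamp to a calendar day; in PostgreSQL, `occurred_at::date` is the usual equivalent. `COALESCE` assigns zero days to users who never logged in.

```python
import sqlite3

# Hypothetical schema and toy rows; SQLite stands in for PostgreSQL.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE experiments (user_id INTEGER, experiment_group TEXT);
CREATE TABLE events (user_id INTEGER, event_name TEXT, occurred_at TEXT);
INSERT INTO experiments VALUES
  (1, 'control'), (2, 'control'), (5, 'control'),
  (3, 'treatment'), (4, 'treatment');
INSERT INTO events VALUES
  (1, 'login', '2024-06-03 09:00'), (1, 'login', '2024-06-03 17:00'),
  (2, 'login', '2024-06-03 10:00'), (2, 'login', '2024-06-04 10:00'),
  (3, 'login', '2024-06-03 08:00'), (3, 'login', '2024-06-04 08:00'),
  (3, 'login', '2024-06-05 08:00'),
  (4, 'login', '2024-06-06 12:00'), (4, 'login', '2024-06-06 18:00');
""")

# Distinct calendar days with at least one login, per user, then averaged
# per group. User 5 never logs in, so COALESCE counts them as 0 days.
AVG_LOGIN_DAYS_SQL = """
SELECT e.experiment_group,
       AVG(COALESCE(d.login_days, 0)) AS avg_login_days
FROM experiments e
LEFT JOIN (
    SELECT user_id, COUNT(DISTINCT DATE(occurred_at)) AS login_days
    FROM events
    WHERE event_name = 'login'
    GROUP BY user_id
) d ON d.user_id = e.user_id
GROUP BY e.experiment_group
"""

avg_login_days = dict(conn.execute(AVG_LOGIN_DAYS_SQL).fetchall())
print(avg_login_days)
```

Two logins on the same day (users 1 and 4) count as one login day. This is what separates this metric from the raw login count.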

User Treatment Investigation

Ideally, users are assigned to treatment groups at random, which keeps the experiment fair and unbiased. However, bugs or technical glitches can disrupt this process and assign users incorrectly. When that happens, the randomization is compromised and the results may be invalid.

The test has a design flaw: it pools new and existing users. It compares their message activity during the test period without accounting for how long each user has been on Viva Engage. Separating the two groups would show whether the new feature affects them differently. For example, existing users might try the new module out of curiosity, while new users might show little particular interest because everything is still unfamiliar to them. A closer look at the assignment data revealed that all new users had been placed in the control group. New users are likely to post less while they are still settling in. Concentrating them in the control group can therefore pull the control average down and inflate the apparent lift.
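The sketch below shows one way to check the assignment: count new and existing users in each group, where "new" means activated on or after the test start. The `users` table, its `activated_at` column, and the start date are hypothetical. The toy rows are constructed to reproduce the pattern described above.

```python
import sqlite3

# Hypothetical schema: users.activated_at marks when each account became active.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE experiments (user_id INTEGER, experiment_group TEXT);
CREATE TABLE users (user_id INTEGER, activated_at TEXT);
INSERT INTO experiments VALUES
  (1, 'control'), (2, 'control'), (5, 'control'),
  (3, 'treatment'), (4, 'treatment');
INSERT INTO users VALUES
  (1, '2024-05-01'), (2, '2024-06-05'), (5, '2024-06-10'),
  (3, '2024-04-20'), (4, '2024-05-15');
""")

TEST_START = "2024-06-01"  # hypothetical start date of the A/B test

# ISO-8601 date strings compare correctly as text in SQLite.
ASSIGNMENT_SQL = """
SELECT e.experiment_group,
       SUM(CASE WHEN u.activated_at >= :start THEN 1 ELSE 0 END) AS new_users,
       SUM(CASE WHEN u.activated_at <  :start THEN 1 ELSE 0 END) AS existing_users
FROM experiments e
JOIN users u ON u.user_id = e.user_id
GROUP BY e.experiment_group
ORDER BY e.experiment_group
"""

rows = conn.execute(ASSIGNMENT_SQL, {"start": TEST_START}).fetchall()
for group, new_users, existing_users in rows:
    print(f"{group}: new={new_users}, existing={existing_users}")

# With random assignment and enough users, every group should contain new users.
groups_without_new_users = [group for group, new_users, _ in rows if new_users == 0]
if groups_without_new_users:
    print("Warning: no new users assigned to", groups_without_new_users)
```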

The data shows that when the analysis is restricted to posts from existing users, the gap between the treatment and control groups narrows considerably.
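The sketch below makes the existing-users-only comparison concrete. It again uses hypothetical tables and toy rows, chosen to illustrate how a lopsided assignment can inflate lift. These are not the case-study figures. The `existing_only` flag toggles a filter on `activated_at`, so the lift with and without new users can be compared side by side.

```python
import sqlite3

# Hypothetical schema and toy rows, built so that all new users sit in control.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE experiments (user_id INTEGER, experiment_group TEXT);
CREATE TABLE users (user_id INTEGER, activated_at TEXT);
CREATE TABLE events (user_id INTEGER, event_name TEXT);
INSERT INTO experiments VALUES
  (1, 'control'), (2, 'control'), (5, 'control'),
  (3, 'treatment'), (4, 'treatment');
INSERT INTO users VALUES
  (1, '2024-05-01'), (2, '2024-06-05'), (5, '2024-06-10'),
  (3, '2024-04-20'), (4, '2024-05-15');
""")
messages_per_user = {1: 4, 2: 1, 5: 1, 3: 6, 4: 4}  # toy message counts
conn.executemany(
    "INSERT INTO events VALUES (?, 'send_message')",
    [(uid,) for uid, n in messages_per_user.items() for _ in range(n)],
)

TEST_START = "2024-06-01"  # hypothetical start date of the A/B test

# Average messages per user by group; :existing_only = 1 drops users
# activated on or after the test start (i.e. new users).
AVG_MESSAGES_SQL = """
SELECT e.experiment_group,
       COUNT(ev.user_id) * 1.0 / COUNT(DISTINCT e.user_id) AS avg_messages
FROM experiments e
JOIN users u ON u.user_id = e.user_id
LEFT JOIN events ev
  ON ev.user_id = e.user_id AND ev.event_name = 'send_message'
WHERE :existing_only = 0 OR u.activated_at < :start
GROUP BY e.experiment_group
"""


def lift(existing_only):
    """Relative lift of treatment over control in average messages per user."""
    params = {"existing_only": int(existing_only), "start": TEST_START}
    avg = dict(conn.execute(AVG_MESSAGES_SQL, params).fetchall())
    return (avg["treatment"] - avg["control"]) / avg["control"]


lift_all = lift(existing_only=False)
lift_existing = lift(existing_only=True)
print(f"all users: {lift_all:.0%}, existing users only: {lift_existing:.0%}")
```

In this toy data, the low-posting new users drag the control average down. Removing them shrinks the lift while leaving it positive.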

Recommendations/Results

The overall test outcome holds up: even among existing users only, the treatment group still comes out ahead, though by a smaller margin. Given this narrower result, the trend should be validated across different user cohorts. In addition, the logging error that placed all new users in the control group must be fixed to ensure accurate testing in the future.
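One way to validate the trend across cohorts is to compute the lift separately within each cohort and check whether it points the same way. The sketch below assumes a hypothetical cohort label per user (here, activation month) and uses toy per-user message counts. It skips cohorts that lack either group or have a zero control mean.

```python
from collections import defaultdict
from statistics import mean


def lift_by_cohort(records):
    """Map each cohort to the relative lift of treatment over control.

    records: iterable of (cohort, group, messages) tuples, one per user.
    Cohorts lacking either group, or with a zero control mean, are skipped.
    """
    buckets = defaultdict(lambda: defaultdict(list))
    for cohort, group, messages in records:
        buckets[cohort][group].append(messages)

    lifts = {}
    for cohort, groups in sorted(buckets.items()):
        if not groups["control"] or not groups["treatment"]:
            continue  # cannot compare without both arms
        control_mean = mean(groups["control"])
        if control_mean == 0:
            continue  # relative lift is undefined
        lifts[cohort] = (mean(groups["treatment"]) - control_mean) / control_mean
    return lifts


# Toy per-user records; the cohort label (activation month) is hypothetical.
records = [
    ("2024-04", "control", 4), ("2024-04", "control", 2),
    ("2024-04", "treatment", 4), ("2024-04", "treatment", 5),
    ("2024-05", "control", 3), ("2024-05", "treatment", 3),
    ("2024-06", "control", 1),  # no treatment users: skipped
]
lifts = lift_by_cohort(records)
print(lifts)
```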

Conclusion

In conclusion, the initial A/B test findings suggest that the new feature had a positive impact on user engagement, but a closer examination reveals methodological errors that need attention. Separating new and existing users and fixing the logging error will refine the analysis and make future test results more reliable. Going forward, the observed changes should be validated across diverse user cohorts before deciding whether to deploy the new feature across Microsoft Viva Engage.